Solo stakers: The backbone of Ethereum

This post discusses the results of our work classifying Ethereum validators by the likelihood that they are run by a solo staker. We classified approximately 6.5% of validator indices on the Beacon Chain as solo stakers. The analysis indexes data from Beacon Chain genesis to approx. the end of 2022.

We’d like to thank LEGO (Lido DAO’s grants org), for supporting this work with a grant, and @IsdrsP, @TimBeiko and @adiasg for reviewing the post.

If you’d like to talk to us about working with this dataset, get in touch via hello@rated.network.

Introduction and aims

Solo stakers have been an integral part of Ethereum’s infrastructure since the very early days of the Beacon Chain, and this is no accident. Enabling individuals to participate in securing Ethereum has been a design goal of the whole endeavour; it is how Ethereum infrastructure becomes truly unstoppable. Be that as it may, Proof of Stake is a security algorithm that takes value-at-risk (the stake) and operational expertise as inputs to produce security. It follows that if the protocol does not empower individuals to participate, concentration will materialize in both the capital and expertise vectors.

Given how the Beacon Chain is architected, mapping validators to their operators does not come as a feature (or anti-feature) out of the box. Mapping validators to operators is presently a complex puzzle of on-chain and off-chain registries that naturally gravitate towards indexing operators with a large footprint. The result has been a wide dispersion of best estimates of how much of the validator population on the Beacon Chain is made up of solo stakers.

The aim of this work is to classify solo stakers on the Ethereum Beacon Chain. Much of the work completed here aims to cluster validators into groups and then assign a confidence that each cluster represents a solo staker. We then use machine learning to identify any irregularities in our labelling and to provide a consistent confidence score for whether a staker is solo.

Defining “solo stakers”

The first stop in our exploration was to define the profile that we will be indexing on; the collection of attributes that define a solo staker–outside of which a validator operator ceases to be considered as such. For the purposes of the exercise, we have tried to identify solo stakers using the following attributes:

Solo stakers are independent operators, not part of a major organization. As such a solo staker might routinely lack 24/7 monitoring and SLAs and might experience more frequent and longer downtime periods, seen less frequently among professional operators. This manifests itself as volatility in the metrics Rated tracks. Caveat: the top performers have little volatility and are indistinguishable from the pros.

Solo stakers run infra on-prem or in a VPS, but mostly on-prem. Some more sophisticated ones use cloud services, but that should not be the rule. Caveat: this information is not readily available. Though we have built infrastructure that enables us to contextualise a significant part of the networking layer of Ethereum, there are parts that are completely opaque. This makes network-level data unsuitable for ML purposes.

Solo stakers fund all (or some, if in Rocketpool) of the stake through their own means. This is usually via a generic wallet that is used for multiple purposes. This wallet doesn’t transact programmatically (e.g. not every hour), and certainly doesn’t transact in volume (e.g. 10K+ ETH). Caveat: platforms such as Allnodes allow users to essentially upload their keys with no on-chain intermediary, and thus we cannot rely on EOA-level features alone.

Solo stakers, in their majority, run fewer than 200 validators each. Over this level, even if the entity is unknown we assume some sophistication that’s beyond the hobbyist/amateur level. Caveat: there are a few savants out there who manage to run 300 validators from a DappNode.

Aside from the main attributes, to help with the heuristic definition of the solo staker profile, we added the condition that solos are more likely to use unique graffitis. Graffitis that appear seldom (e.g. in fewer than 10 validators), and without following a certain pattern, are unlikely to belong to a major organisation. Caveat: some VaaS providers allow users to customise their graffiti.

Validator selection

The next step in the project was to define a sample of validators to run the analysis on, with the goal of differentiating individual behaviour from that of the wider population. To get reliable results we needed validators that had been active for a minimum period.

After some experimentation, we found that a 90-day time window worked well: it allowed enough time for patterns in behaviour to emerge. To ensure that the examined validators were active throughout the selected window, only validators activated prior to 27/11/2022 were used. The total number of validators in this set is 500,000.

Dataset features

We then proceeded to experiment with a large suite of attributes (60+ different metrics on a per validator index basis) in order to arrive at the highest signal ones, that would ultimately help us identify this “differentiated behaviour” that we were looking for.

We found aggregated features to be very strong indicators of staker status, and ultimately arrived at the following metrics as the keystone attributes that would later inform the modelling toolchain: (i) performance metrics like effectiveness (see our docs for a walkthrough of effectiveness), inclusion delay, missed attestations and more, (ii) consensus client (using blockprint), (iii) number of validators associated with a deposit address and withdrawal credentials, (iv) total incoming/outgoing gas for the deposit address, and (v) validator index (not used in the final model, but a useful feature when building inferred features, due to the way some operators create validators in bulk).
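As an illustration of attribute (iii), the sketch below counts how many validators share each deposit address and attaches that count to every validator index. It is a minimal example under stated assumptions, not the pipeline used for this work: the function name `validators_per_address` and the sample addresses are hypothetical.

```python
from collections import Counter


def validators_per_address(deposit_address_by_index):
    """Map each validator index to the number of validators sharing its deposit address."""
    counts = Counter(deposit_address_by_index.values())
    return {idx: counts[addr] for idx, addr in deposit_address_by_index.items()}


# Hypothetical sample: validator index -> deposit address (made-up values)
sample = {1: "0xaaa", 2: "0xaaa", 3: "0xbbb", 4: "0xaaa"}
features = validators_per_address(sample)
print(features)
```

A low count on its own is not proof of a solo staker (see the Allnodes caveat above), which is why a feature like this is combined with performance and client features rather than used alone.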

We then approached the project in the following two distinct stages.

Stage 1: Soft labelling

Our objective in Stage 1 was to provide a “soft label” (or “prior”) for each validator, from which a final classifier could learn patterns. Of the 500k validators, 350k belong to node operators that Rated Labs can already confidently label as professionals. We have built this database via OS databases, operator disclosures to Rated, and on-chain registries. 250k of those belong to just the top 25 known node operators.

Our first step was to build more complete profiles of these known operators, by attaching untagged validators to those profiles.

Figure 1: Heatmap Dimensionality Reduction (UMAP) of the input features against predicted confidence of solo/not-solo. Blue is high confidence solo, Red is high confidence not-solo. Yellows and Greens are less confident. Note that while there are distinct “islands” of solo/not-solo in most cases it is a sliding scale.

We utilised a 5-fold cross-validation technique to train an XGBoost model, with the objective of determining which node operator a given validator closely mirrored. Our focus was primarily on the top 25 node operators, while we grouped all other recognized operators into an "other" class for the sake of simplicity.

This model was advantageous in several aspects. First, it enabled us to make comparative statements like, "Validator X demonstrates behaviour strikingly similar to node operator Y, yet distinctly different from operator Z." This characteristic was invaluable for in-depth subsequent analysis.

Second, the model permitted us to tentatively assign validators to node operators based on the strength of their similarity to known node operators - a practice we referred to as "soft-labelling". We only applied this soft-label when the similarity value was exceptionally high, i.e., 0.9 or more. For validators that did not clearly correlate with any known operator, we expected their similarity values to be evenly distributed at approximately 1/26 (around 0.04) for all classes.

It's important to note, however, that this preliminary assignment or "soft-label" is not considered a definitive label. It can be adjusted or overridden during the next stage of training.
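The thresholding rule described above can be sketched as follows. This is a hedged illustration only: the class names, the `soft_label` helper and the example similarity values are hypothetical, and only the 0.9 threshold and the 26 classes (25 operators plus "other") come from the text.

```python
SOFT_LABEL_THRESHOLD = 0.9  # threshold stated in the post


def soft_label(similarities, threshold=SOFT_LABEL_THRESHOLD):
    """Return the best-matching class if its similarity clears the threshold, else None."""
    operator, score = max(similarities.items(), key=lambda item: item[1])
    return operator if score >= threshold else None


# Hypothetical class names: 25 top operators plus one "other" class
classes = [f"operator_{i}" for i in range(1, 26)] + ["other"]

# A validator that closely mirrors one operator (the other 25 classes share 0.1)
confident = {name: 0.004 for name in classes}
confident["operator_7"] = 0.9

# A validator that mirrors no known operator: similarity spread evenly over 26 classes
uniform = {name: 1 / len(classes) for name in classes}

confident_label = soft_label(confident)  # "operator_7"
uniform_label = soft_label(uniform)      # None, since each class sits near 0.04
```

Validators that come back as `None` here are the ones left unattached to any known operator and carried forward for further labelling.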

💡 The 25 operators were picked because we want lots of examples of each node operator to train our model against, and for the top 25 there are thousands. When you start getting to 50 the numbers drop a lot (long tail).

This left us with around 50k validator indices (out of the 500k) that most likely can be classified as solo stakers. To help label these further we used an unsupervised technique (HDBSCAN) to group validators into clusters. This clustering technique allowed us to say that a collection of validators is in some sense similar to one another.

We then manually checked why each grouping was made and, when appropriate, labelled a whole group at once as solo/not-solo. While this technique is empirical, it is a powerful way to group and then label large amounts of unknown data. Experimentally, we tuned this to give us 7 clusters. We subsampled 10 validators from each cluster and checked them on rated.network. We were able to easily identify 3 clusters of whales using this method.
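The per-cluster subsampling step can be sketched like this. The cluster assignments below are synthetic stand-ins for the output of a clustering run, and `subsample_per_cluster` is a hypothetical helper; only the figures of 7 clusters and 10 validators per cluster come from the text.

```python
import random
from collections import defaultdict


def subsample_per_cluster(cluster_by_index, per_cluster=10, seed=0):
    """Draw up to `per_cluster` validator indices from each cluster for manual review."""
    members = defaultdict(list)
    for idx, cluster in cluster_by_index.items():
        members[cluster].append(idx)
    rng = random.Random(seed)  # fixed seed so the review sample is reproducible
    return {
        cluster: sorted(rng.sample(indices, min(per_cluster, len(indices))))
        for cluster, indices in members.items()
    }


# Synthetic assignments: 350 validator indices spread over 7 clusters
assignments = {idx: idx % 7 for idx in range(350)}
review = subsample_per_cluster(assignments)
for cluster, indices in sorted(review.items()):
    print(cluster, indices)
```

Fixing the seed keeps the manually reviewed sample stable between runs, so a label assigned to a cluster can be traced back to the exact validators that were inspected.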

In the final step of Stage 1, our team manually checked a few dozen results.

Stage 2: Classification

Now with soft labels in hand, we trained the final classifier using XGBoost. We optimised our models with Bayesian optimization over a minimum of 25 trials. It is important to understand that at this stage we had labels that we were only partly confident in. To train a classifier well, we needed to represent this lack of confidence during training.

We did this using label smoothing: we replaced our less confident labels with 0.95 (from 1) for solo and 0.05 (from 0) for not-solo. We found cross entropy worked well as a loss. For our optimisation metric we used F1. With this process we didn’t penalise the model too much for incorrect labels. This gave us our final output.
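To make the effect of label smoothing concrete, here is a small sketch of the 0.95/0.05 replacement and of binary cross entropy against a soft target. It is an illustration in plain Python, not the training code; the helper names and the example prediction of 0.01 are assumptions.

```python
import math


def smooth_label(label, confident, eps=0.05):
    """Keep a hard 0/1 label if we trust it; otherwise soften it to eps or 1 - eps."""
    if confident:
        return float(label)
    return 1.0 - eps if label == 1 else eps


def binary_cross_entropy(target, predicted, tiny=1e-12):
    """Cross entropy between a (possibly soft) target and a predicted probability."""
    p = min(max(predicted, tiny), 1.0 - tiny)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


# A validator labelled "solo" that the model scores at only 0.01
hard_loss = binary_cross_entropy(smooth_label(1, confident=True), 0.01)
soft_loss = binary_cross_entropy(smooth_label(1, confident=False), 0.01)
print(hard_loss, soft_loss)
```

When the model disagrees with a label, the smoothed target yields a smaller loss than the hard target, so a possibly wrong soft label pulls on the model less strongly than a trusted one would.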

Results

The output of the pipeline we described in previous sections was organised in a database that’s classified in deposit address level groupings of validator indices, each with an attached confidence score on the “solo/not-solo” spectrum. The figure below shows the distribution of confidence levels across all the deposit addresses that the starting 500k validator indices map back to.

Figure 2: Solo-prediction confidence level histograms across deposit address level groupings on the 500k validators we analysed; 0 implies high confidence not-solo, while 1 implies the opposite.

As the plot indicates, our final model makes very confident predictions. This is helpful because we expect users of the output to consider everything above a chosen threshold “probably solo”, and strongly confident predictions reduce the impact of a poorly chosen threshold.

Figure 3: Solo-prediction confidence level histograms across entity level groupings.

Figure 3 is a subsample of a larger piece of post-processing work we did to review whether predicted outcomes match heuristic expectations about entities that might be strongly associated with solo stakers (or not). The validator indices (by deposit address) that printed Avado or Dappnode graffitis in their proposals are, in their majority, predicted to be high confidence solos. Rocketpool is split in two (e.g. due to individuals running infrastructure on providers like Allnodes), and Coinbase-associated validators are, in their overwhelming majority, confidently classified as not-solos.

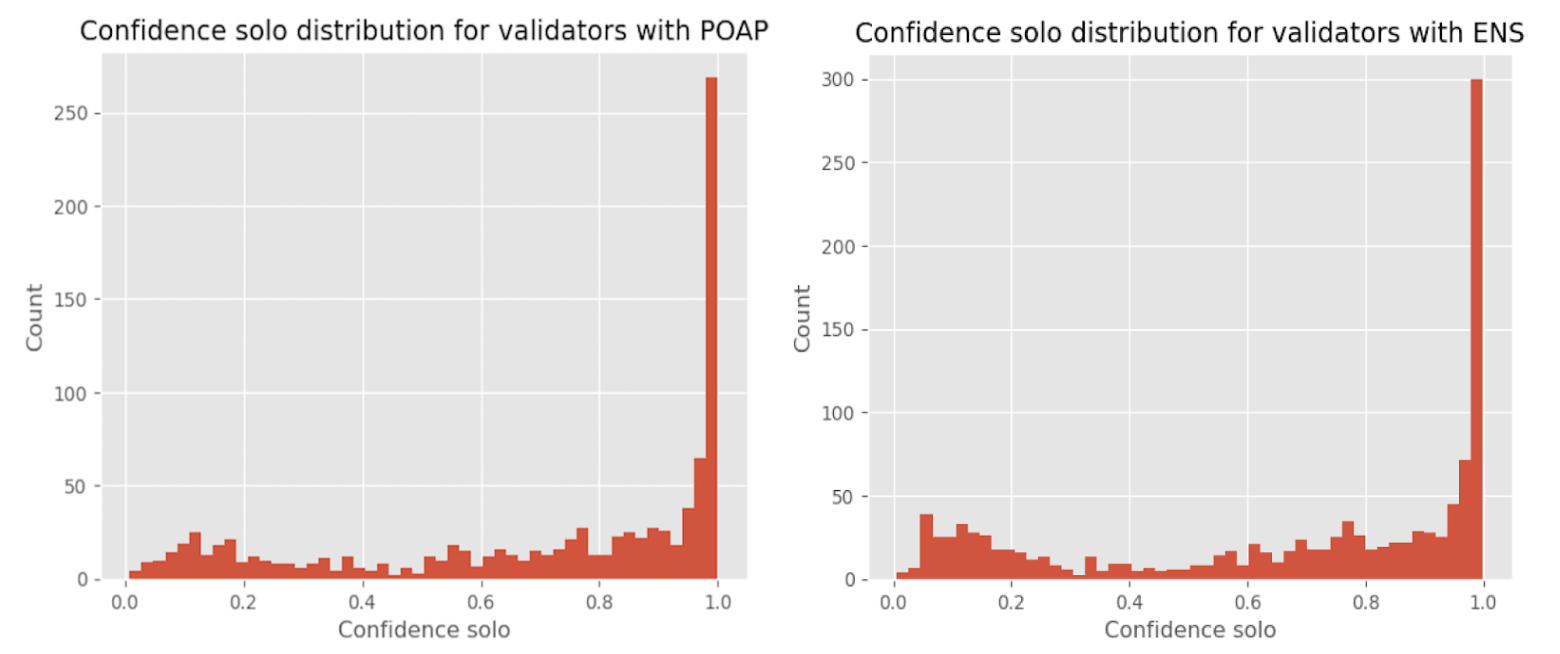

Figure 4: Solo-prediction confidence interval for POAP holders and ENS mappings

Figure 4 depicts the results of an analysis targeting deposit addresses that have received various POAPs the solo community has distributed (left), as well as deposit addresses that map to known ENS names. As above, the majority of validators behind those addresses are predicted to be solo stakers, with a smaller number distributed across the spectrum (which again is explained by the prevalence of staking providers such as Allnodes in the various staking communities).

Summary results

Of the 500k validators, we estimate 6.5% of validators (by index/pubkey) as solo based on a confidence score of 50% or greater.

3.3% were strongly predicted as solo with 99% confidence or above, 1.1% were 95% to 99%, 0.35% were 90% to 95%.

93.5% were below 50% confidence as solo and 45.9% under 1%.
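The bands above are simple shares of validators whose confidence score falls in a given range. The sketch below shows one way to compute such shares; the scores are made-up values chosen for illustration, not the real dataset, and the helper names are hypothetical.

```python
def share_at_or_above(scores, threshold):
    """Fraction of scores at or above the threshold."""
    return sum(score >= threshold for score in scores) / len(scores)


def share_in_band(scores, low, high):
    """Fraction of scores with low <= score < high."""
    return sum(low <= score < high for score in scores) / len(scores)


# Made-up confidence scores for ten validators (illustrative only)
scores = [0.001, 0.005, 0.02, 0.3, 0.45, 0.6, 0.92, 0.97, 0.995, 0.999]

solo_share = share_at_or_above(scores, 0.5)      # "solo" at the 50% threshold
very_high = share_at_or_above(scores, 0.99)      # 99% confidence or above
high_band = share_in_band(scores, 0.95, 0.99)    # 95% to 99%
mid_band = share_in_band(scores, 0.90, 0.95)     # 90% to 95%
print(solo_share, very_high, high_band, mid_band)
```

A user of the dataset who wants a stricter definition of "solo" can simply raise the threshold passed to `share_at_or_above`.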

In post-processing we appended several columns to the deposit address’ key that might point to associations to the ENS registry, staking community related POAPs and to entities like Rocketpool, Dappnode or Avado, going by graffiti. We did this to help add some colour to the accuracy of the prediction, and came up with results that heuristically confirmed the model’s predictions.

💡 Fun fact: We also went back to the earlier stages of the pipeline and introduced these attributes in the initial set of variables for consideration to yield high signal attributes. What we found was that these introduced no marginal improvement to the signal that the highest value attributes produced.

Concluding remarks

Over the 2.5 years it has been active, the Beacon Chain has become the de-facto leader in terms of the USD value of active stake securing a network, by an order of magnitude. Enabling solo validation has been a core design principle of the Ethereum Beacon Chain. From where we stand, we see this 6.5% of stake represented by what we have classified as solo stakers as a strong showing in terms of resiliency. This is a highly diverse and distributed set of validators that provides a strong backbone for the network as a whole.

This idea is further supported by preliminary findings from a probe we have run on the p2p layer of the network. Early data imply that the share of validating Beacon Nodes that might map to solo stakers ranges from 35% to a whopping 75% of the whole, out of a total of approx. 3000 nodes we’ve been able to parse in the analysis. We will be following up with a post that discusses our findings there in the coming weeks.

We hope the community at large will find this piece of work valuable, and equally that it sparks a lively discussion around solo stakers and their broader role in the Ethereum ecosystem.

About Rated

Rated was founded in 2022, by Elias Simos and Aris Koliopoulos. Elias was previously a Protocol Specialist at Coinbase (via the Bison Trails acquisition), while Aris spent the last 5 years building out social media and content platforms for tens of millions of users at Drivetribe.

Get in touch via hello@rated.network, and check out our job openings here.